Serviced mux issue causing huge CPU spike

| Subject: |

Serviced mux issue causing huge CPU spike |

| Author: |

Jad Baz |

| Posted: |

2019-03-27 16:06 |

My issues with Zenoss keep getting weirder (ref this, and this)

I have 2 machines with Zenoss 6.2.1 and control center 1.5.1

They are both CentOS 7 servers with 16 GB of ram and 4 cores each

They are on the same VMware infrastructure, but otherwise, completely separate and independent

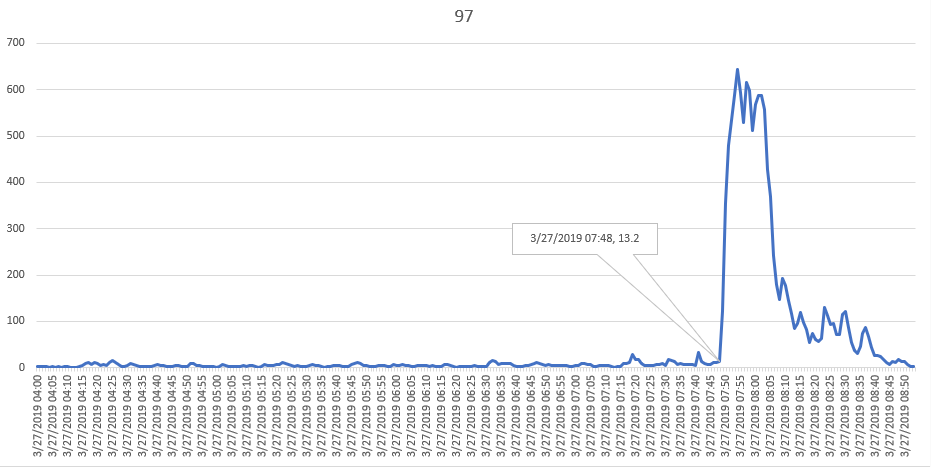

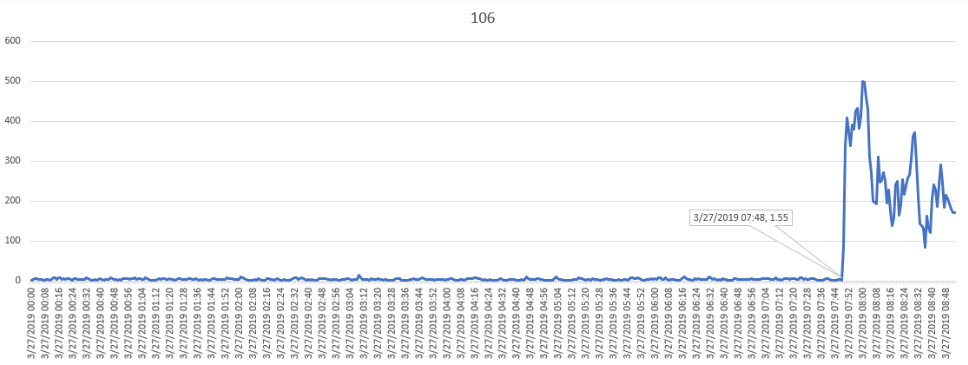

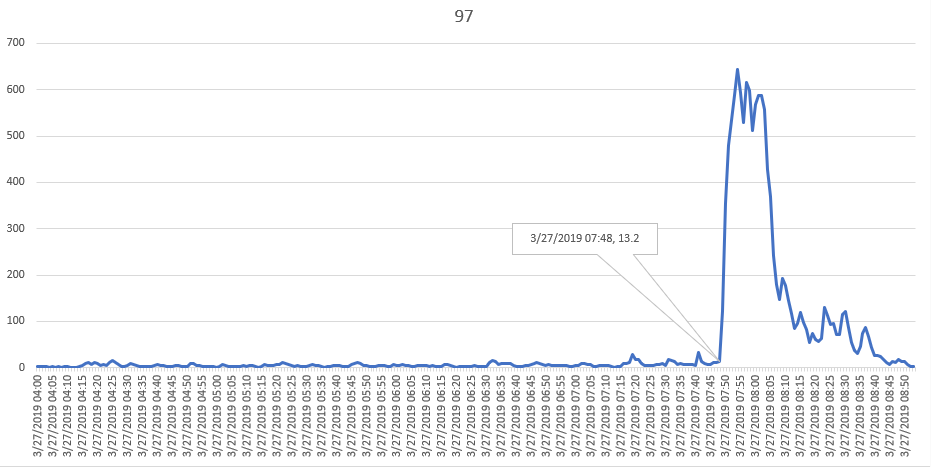

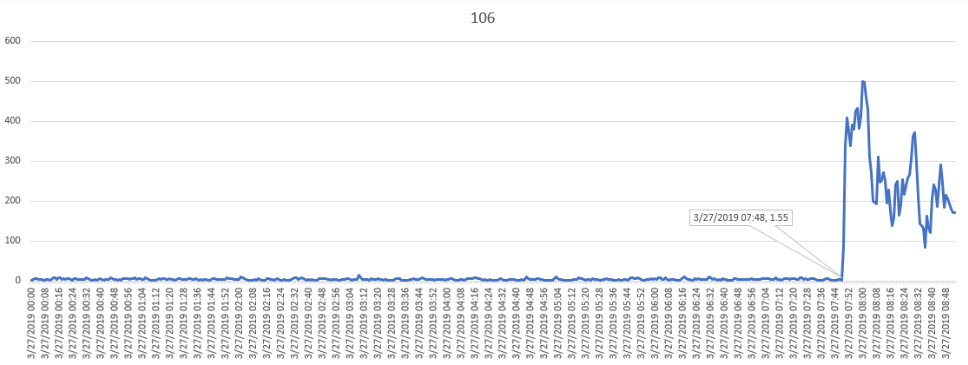

On Mar 27 at 7:49, they both saw an almost identical spike in CPU usage

Check out the below:

I did not include memory usage in this graph but the "available memory" was about 1.5 GB before the CPU spike and went down to around 500 MB during

I dug into the serviced logs (journalctl -u serviced -l) and found the following on one server:

Mar 27 07:48:58 testserver1 serviced[14267]: time="2019-03-27T12:48:57Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.30:49544: i/o timeout" location="mux.go:148"

Mar 27 07:49:09 testserver1 serviced[14267]: time="2019-03-27T12:49:09Z" level=warning msg="Unable to predict storage availability" error="Post http://127.0.0.1:8888/api/performance/query: net/http: request canceled (Client.Timeout exceeded while awaiting

Mar 27 07:49:24 testserver1 serviced[14267]: time="2019-03-27T12:49:23Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.32:51586: i/o timeout" location="mux.go:148"

Mar 27 07:49:24 testserver1 serviced[14267]: time="2019-03-27T12:49:24Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.36:45542: i/o timeout" location="mux.go:148"

Mar 27 07:49:24 testserver1 serviced[14267]: time="2019-03-27T12:49:24Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.14:51520: i/o timeout" location="mux.go:148"

Mar 27 07:49:25 testserver1 serviced[14267]: time="2019-03-27T12:49:24Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=1

Mar 27 07:49:25 testserver1 serviced[14267]: time="2019-03-27T12:49:24Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.39:37268: i/o timeout" location="mux.go:148"

Mar 27 07:49:25 testserver1 serviced[14267]: time="2019-03-27T12:49:24Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.18:48252: i/o timeout" location="mux.go:148"

Mar 27 07:49:33 testserver1 serviced[14267]: time="2019-03-27T12:49:30Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=1

Mar 27 07:49:33 testserver1 serviced[14267]: time="2019-03-27T12:49:30Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=1

Mar 27 07:49:37 testserver1 serviced[14267]: time="2019-03-27T12:49:37Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=1

Mar 27 07:49:37 testserver1 serviced[14267]: time="2019-03-27T12:49:37Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.18:48330: i/o timeout" location="mux.go:148"

Mar 27 07:49:37 testserver1 serviced[14267]: time="2019-03-27T12:49:37Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.33:51162: i/o timeout" location="mux.go:148"

Mar 27 07:49:37 testserver1 serviced[14267]: time="2019-03-27T12:49:37Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.32:51630: i/o timeout" location="mux.go:148"

Mar 27 07:49:37 testserver1 serviced[14267]: time="2019-03-27T12:49:37Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.40:56240: i/o timeout" location="mux.go:148"

And the following on the other server:

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=172.17.0.27:36600

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=172.17.0.18:55270

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=172.17.0.18:55296

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.31:49550: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.31:49550

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=172.17.0.22:55274

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.13:51554: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.13:51554

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.13:51552: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.13:51552

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.22:55466: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.22:55466

Mar 27 07:48:57 testserver2 serviced[18596]: W0327 12:48:57.884612 18596 connection.go:204] Could not ping connection to /: coord-client: connection is closed

Mar 27 07:49:00 testserver2 serviced[18596]: I0327 12:49:00.466863 18596 connection.go:217] Refreshing connection to zookeeper

Mar 27 07:49:13 testserver2 serviced[18596]: time="2019-03-27T12:49:13Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.32:57974: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.32:57974

Mar 27 07:49:16 testserver2 serviced[18596]: time="2019-03-27T12:49:16Z" level=warning msg="Health check failed" error="healthcheck timed out" healthcheck=running location="container.go:907" logger=isvcs

Mar 27 07:49:29 testserver2 serviced[18596]: time="2019-03-27T12:49:29Z" level=warning msg="Health check failed" error="healthcheck timed out" healthcheck=hasQuorum location="container.go:907" logger=isvcs

Mar 27 07:49:29 testserver2 serviced[18596]: time="2019-03-27T12:49:29Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.27:39486: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.27:39486

Mar 27 07:49:34 testserver2 serviced[18596]: time="2019-03-27T12:49:34Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.97:22250->172.17.0.22:58444: i/o timeout" location="mux.go:148" logger=proxy remoteaddr=172.17.0.22:58444

Notice the small time difference (14s) between the first 2 logs on both machines

Mar 27 07:48:44 testserver2 serviced[18596]: time="2019-03-27T12:48:42Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="Read too few bytes for message body" location="mux.go:148" logger=proxy remoteaddr=172.17.0.27:36600

Mar 27 07:48:58 testserver1 serviced[14267]: time="2019-03-27T12:48:57Z" level=warning msg="Unable to read valid mux header. Closing connection" address=[::]:22250 error="read tcp 192.168.1.106:22250->172.17.0.30:49544: i/o timeout" location="mux.go:148"

Note that both of the above are the first warnings about this issue on March 27 and they happened to be only 14 seconds apart

What is really curious as well as that this always seems to happen around 8am (EST) and on all 3 occasions, we saw the same behavior (big CPU spike), though some were worse than others

[root@testserver1 ~]# journalctl -u serviced -l | grep "Unable to read valid mux header" | cut -d : -f1,2 | uniq -c

4 Mar 21 07:53

4 Mar 23 08:01

1 Mar 27 07:48

71 Mar 27 07:49

85 Mar 27 07:50

Note, finally, that I have several services running on the same server but that during the spike, they were mostly dormant and were not talking to Zenoss

2 conclusions seem inevitable:

- The CPU spike is directly related to the serviced error or both have a common ancestor

- The 2 incidents on both servers have a common cause. This could be one of 2 things:

- It is some scheduled job that ran at the same time on both machines at the same time

- An infrastructure issue caused the error to appear on both machines at the same time

#1 is likely because it always seems to happen around 8am (but not every day either)

But #2 is also likely since this looks like a network issue (mux.go#L148)

Concerning the issue itself, I think we need to understand 3 things:

- What exactly is TCP Multiplexer and what does it do? Who connects to it? Why does it run over https? (I have SERVICED_MUX_DISABLE_TLS=0)

- Why is the issue happening?

- And when it does happen, why does does it cause such a huge CPU usage spike?

I would really appreciate any pointers as I'm really struggling to make sense of all this

(Also posted to github.com/control-center/serviced/issues/3736)

------------------------------

Jad

------------------------------

| Subject: |

RE: Serviced mux issue causing huge CPU spike |

| Author: |

Jad Baz |

| Posted: |

2019-03-28 07:07 |

As an update on this

I have another environment in a different network entirely that never had the "mux header" issue

So I think this rules out the "scheduled job" hypothesis

------------------------------

Jad

------------------------------

| Subject: |

RE: Serviced mux issue causing huge CPU spike |

| Author: |

Jad Baz |

| Posted: |

2019-03-29 05:27 |

Another update on this is that disconnecting the network does not reproduce the issue

------------------------------

Jad

------------------------------